For the past few weeks, I’ve been building a personal chatbot. It’s an exploration of AI app development and its patterns, from UX challenges to server-client tooling to backend architecture choices. This blog post documents parts of that journey, with code snippets and links to resources I found useful throughout the process.

The Inspiration ✨

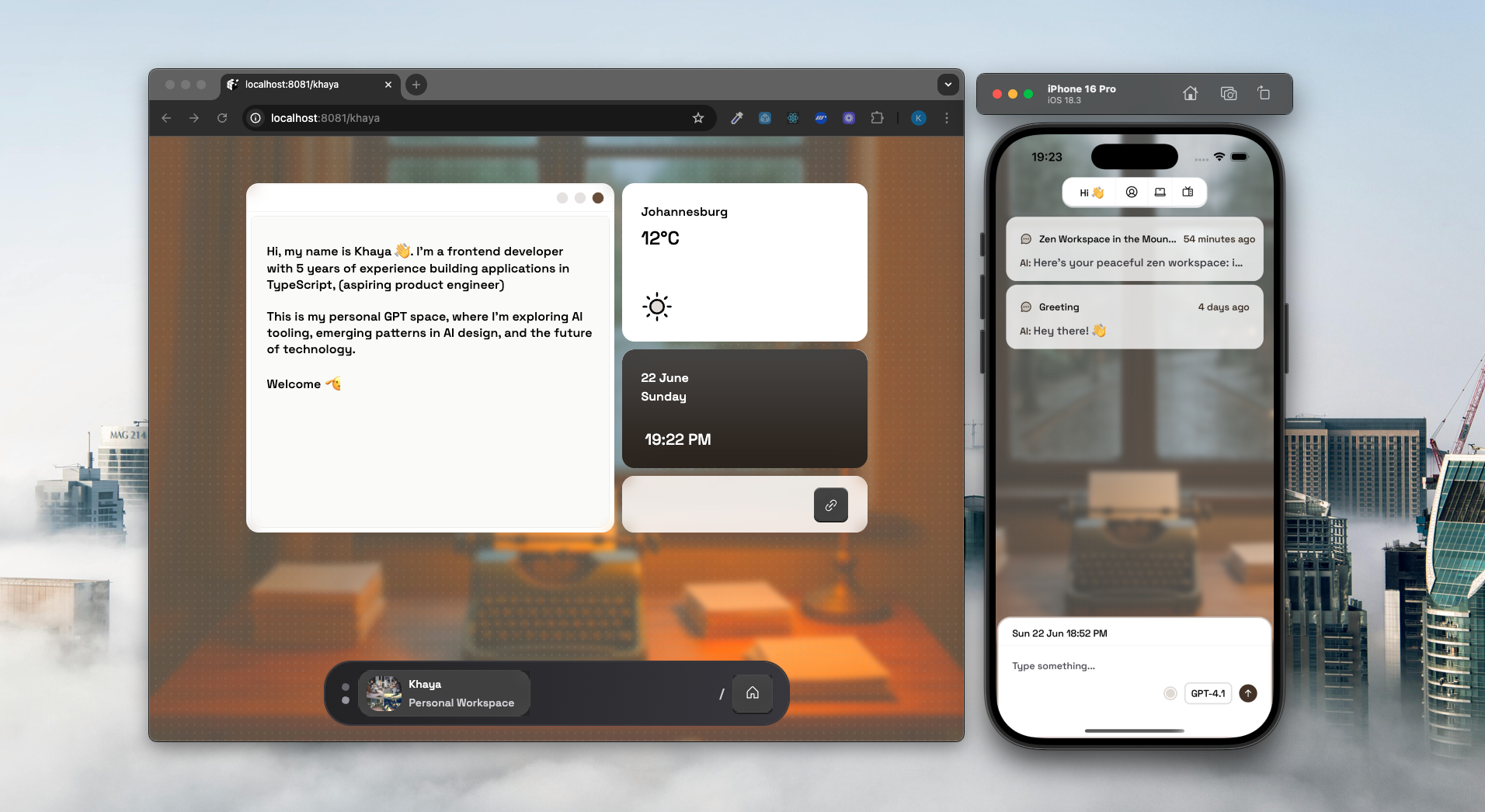

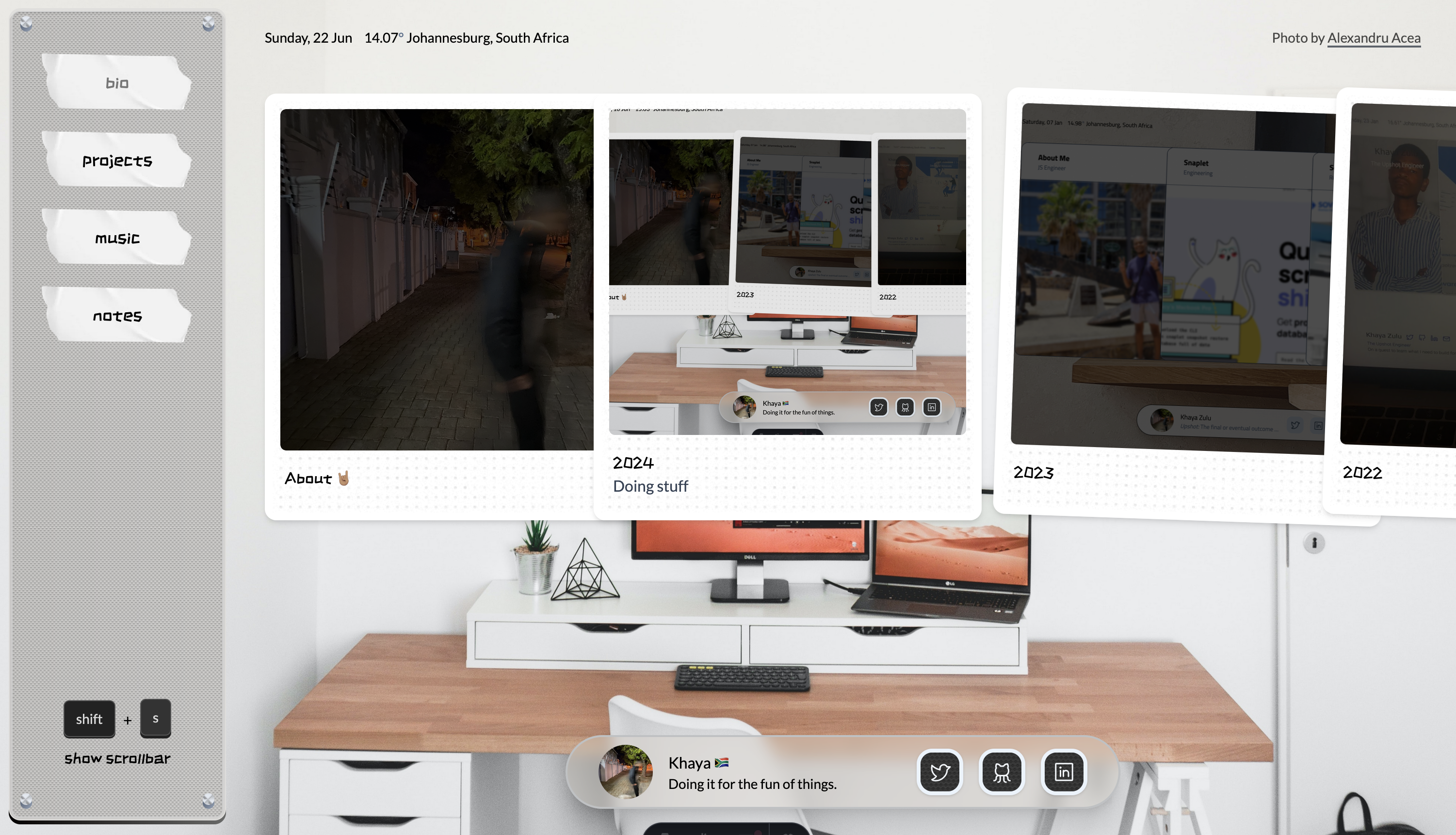

I want to start by sharing the inspiration behind this project: my personal website. The idea revolves around workspaces. As engineers, we spend a significant amount of time at our desks. I wanted my website to reflect that and, with AI, maybe even serve as a smart link-in-bio.

So when OpenAI announced their image generation API, my mind immediately jumped to the idea of creating infinite, personalized workspaces, each generated from a prompt. That gave birth to kayaGPT, a chatbot that felt personal to me, and one I could take anywhere.

Using the OpenAI API to generate a workspace image.

    import OpenAI, { toFile } from "openai";

    // get reference image
    const referenceImageResponse = await fetch("...");

    // download reference image as a blob
    const blob = await referenceImageResponse.blob();
    // toFile returns a promise, so it has to be awaited
    const result = await toFile(blob, null, { type: "image/png" });

    // generate a workspace image
    const openai = new OpenAI({ ... });
    const imageResponse = await openai.images.edit({
      model: "gpt-image-1",
      image: [result],
      prompt: "...generation instructions... A workspace overlooking the Jozi skyline.",
    });

Globally Distributed Universal Apps

React Native + Expo

Although it still has some way to go, web support in the React Native ecosystem has come a long way, and with Expo as a framework, it’s even better.

For example, I used a package called react-native-image-colors, which fetches prominent colors from an image by leveraging platform-specific APIs:

- For Android: the Palette API.

- For Web: node-vibrant.

- For iOS: the UIImageColors Swift package.

This meant that locally I had to build the app for each platform, using npx expo run:ios and npx expo run:android for native and npx expo start --web for the web, but it was still very cool to see it all work.
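Because each platform API reports a different set of colors, the result has to be normalized before it can drive a theme. Here is a minimal sketch of that step. The local types are an assumption that mirrors the platform-tagged result shapes described in the react-native-image-colors README, and `pickPrimaryColor` and the fallback value are hypothetical names of my own, not part of the library.

```typescript
// Assumed result shapes: a "platform" tag plus optional color fields.
// Only the fields used below are declared; the real results carry more.
type AndroidColors = { platform: "android"; dominant?: string; vibrant?: string };
type WebColors = { platform: "web"; dominant?: string; vibrant?: string };
type IOSColors = { platform: "ios"; primary?: string; background?: string };
type ImageColorsResult = AndroidColors | WebColors | IOSColors;

// Hypothetical helper: reduce a platform-specific result to one hex color.
function pickPrimaryColor(result: ImageColorsResult, fallback = "#000000"): string {
  if (result.platform === "ios") {
    // The iOS result has no "vibrant" field, so prefer its primary color.
    return result.primary ?? result.background ?? fallback;
  }
  // Android and web expose a similar palette-style set of fields.
  return result.vibrant ?? result.dominant ?? fallback;
}
```

The returned string can then be handed to whatever builds the app's color palette, regardless of which platform produced it.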

Once a workspace is selected, I use a color helper function to get tints and shades of the selected color (similar to this). That color palette is then used throughout the app.
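To make the idea concrete, here is a rough sketch of what a tints-and-shades helper could look like. It is not the helper used in kayaGPT: the `createPalette` name, the 100 to 900 keys, and the linear mix toward white and black are all assumptions chosen for illustration.

```typescript
const SHADES = [100, 200, 300, 400, 500, 600, 700, 800, 900] as const;
type Shade = (typeof SHADES)[number];
type Palette = Record<Shade, string>;

// Parse a 6-digit hex color ("#3366cc" or "3366cc") into RGB channels.
function hexToRgb(hex: string): number[] {
  const match = /^#?([0-9a-f]{6})$/i.exec(hex);
  if (!match) throw new Error(`Expected a 6-digit hex color, got "${hex}"`);
  const value = parseInt(match[1], 16);
  return [(value >> 16) & 0xff, (value >> 8) & 0xff, value & 0xff];
}

// Format RGB channels back into a lowercase hex string.
function rgbToHex(rgb: number[]): string {
  return "#" + rgb.map((c) => Math.round(c).toString(16).padStart(2, "0")).join("");
}

// Linearly interpolate each channel from one color toward another.
function mix(from: number[], to: number[], amount: number): number[] {
  return from.map((c, i) => c + (to[i] - c) * amount);
}

// Hypothetical helper: 500 is the base color, lower keys are tints
// (mixed toward white), higher keys are shades (mixed toward black).
function createPalette(baseHex: string): Palette {
  const base = hexToRgb(baseHex);
  const palette = {} as Palette;
  for (const shade of SHADES) {
    const distance = (shade - 500) / 500; // ranges from -0.8 to 0.8
    const target = distance < 0 ? [255, 255, 255] : [0, 0, 0];
    palette[shade] = rgbToHex(mix(base, target, Math.abs(distance)));
  }
  return palette;
}
```

With a palette shaped like this, a light tint such as `palette[100]` and a darker shade such as `palette[700]` can serve as the two stops of a gradient.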

    // user-summary.tsx
    const userSettings = useUserSettings();

    return <LinearGradient colors={[
      userSettings.colorSettings[100],
      userSettings.colorSettings[700]
    ]}

A few other packages I really enjoyed working with were expo-blur and expo-linear-gradient. They provide blur and gradient effects that work smoothly across native and web (with minimal setup). I also explored Expo’s DOM components feature, which lets you use web UI components in native apps through a simple "use dom" directive. I didn’t end up needing it for this project, but it’s another really cool feature.

- Tweet expressing my excitement about Expo Router’s future

- Talk: React Native 2030 by Fernando Rojo

User Experience

I’m particularly interested in the UI/UX side of the AI application layer. kayaGPT gave me the chance to explore this topic more deeply.

Here are a few questions I found myself asking:

- “Is this UI better suited for a voice agent?”

- “Can tasks like form submission be replaced with tool calls?”

- “Can my five-step onboarding flow just be a five-step conversation?”

I quickly landed on being as minimal (yet intentional) as possible, leaning heavily on the LLM’s capabilities like reasoning and tool-calling. It’s clear this space is in its early days, which is exciting, because there’s so much room to invent.

From a technical perspective, I’ve been using a monorepo setup for this project. I have an AI package that is shared between the server and client, which makes it easy to build highly composable UI components driven by the output of an LLM tool call.

    // backend/src/services/tools/new-workspace.ts
    import { createNewWorkspaceTool } from "@kgpt/ai/tools";

    export const newWorkspaceTool = (env: Env, props: { userId: string }) => {
      return createNewWorkspaceTool(async ({ prompt }) => {
        // ... generate a workspace image
        return { workspaceKey: "...", prompt };
      });
    };

    // mobile/features/tool/new-workspace.tsx
    import type { NewWorkspaceToolOutput } from "@kgpt/ai/tools";

    export const NewWorkspaceTool = ({ workspaceKey, prompt }: NewWorkspaceToolOutput) => {
      // ...
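The shared package itself isn't shown here, so the following is only a guess at its shape, written without any AI SDK dependency. The names `createNewWorkspaceTool` and `NewWorkspaceToolOutput` come from the snippets in this post; the `ToolDefinition` type, the description string, the stub key, and `describeWorkspace` are hypothetical.

```typescript
// --- shared package (what "@kgpt/ai/tools" might export) ---
type NewWorkspaceToolInput = { prompt: string };
type NewWorkspaceToolOutput = { workspaceKey: string; prompt: string };

// Hypothetical minimal tool shape: metadata plus an execute function.
type ToolDefinition<Input, Output> = {
  description: string;
  execute: (input: Input) => Promise<Output>;
};

// The factory pins the input and output types once, so the server only
// supplies the implementation and cannot drift from what the client expects.
function createNewWorkspaceTool(
  execute: (input: NewWorkspaceToolInput) => Promise<NewWorkspaceToolOutput>,
): ToolDefinition<NewWorkspaceToolInput, NewWorkspaceToolOutput> {
  return { description: "Generate a new workspace image from a prompt.", execute };
}

// --- server side: provide the implementation (stubbed here) ---
const newWorkspaceTool = createNewWorkspaceTool(async ({ prompt }) => {
  return { workspaceKey: "stub-workspace-key", prompt };
});

// --- client side: a renderer typed on the same shared output ---
function describeWorkspace({ workspaceKey, prompt }: NewWorkspaceToolOutput): string {
  return `Workspace ${workspaceKey} generated from "${prompt}"`;
}
```

The point of the design is that a change to the output type in the shared package surfaces as a type error on both the server and the client at the same time.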

- Talk: AI UX Design by Allen Pike

- Apple’s Machine Learning Design Guidelines

- Apple’s Generative AI

- Tweet by Patrick Collison (Stripe co-founder), reiterating the opportunities in this space.

Hono & Cloudflare ⛅️

While we’re still on the topic of UX, it’s important to highlight the role of backend infrastructure.

For AI UX, latency is king. If your voice agent takes two seconds to respond because it’s fetching information in the background, that extra delay negatively impacts the user experience.

For this project, I chose HonoJS, hosted on Cloudflare. Right now, Cloudflare’s ecosystem covers almost every layer of the modern AI stack, offering a great developer experience through features like Bindings and zero cold-start serverless functions via Workers.

Using Workers AI to comment on the weather.

    import { createWorkersAI, WorkersAI } from "workers-ai-provider";
    import { generateText } from "ai";

    app.get("/weather", async (c) => {
      // ...
      const workersai = createWorkersAI({
        binding: c.env.AI,
      });

      const response = await generateText({
        // use available open-source models https://developers.cloudflare.com/workers-ai/models/
        model: workersai.model("@cf/meta/llama-3-8b-instruct"),
        prompt: `The temperature in ${weather.regionName} is ${temp}°C with a humidity of ${weather.humidity}%.`,
        system: `You are a helpful assistant that displays the current temperature and comments on the user's current weather conditions in a friendly manner.\n The user's name: ${user.displayName}`,
      });
      // ...
    });

Building with AI has made coding feel like play again. Frameworks like HonoJS and RedwoodSDK make hacking and experimentation genuinely enjoyable.

Conclusion

In terms of where the project stands, there’s still plenty of room for improvement. Some of it relates to topics covered in this post:

- UX improvements, like making the onboarding flow fully voice-based (WebRTC 🤘).

- Client-side tooling: using LiveStore’s Expo adapter to bring offline capability, or exploring local models with React Native ExecuTorch.

- Architecture upgrades: introducing more event-driven interactions powered by Cloudflare’s Workflows service.

Finally, I encourage you to start now: build something for yourself. Foundation models have introduced a major technology shift that every developer, whether in frontend, security, or even DevOps, will end up playing a part in.

It’s important to remember that we’re still early, and that the opportunity lies in the domain knowledge and creativity you can bring to the table.

~ Peace ✌️